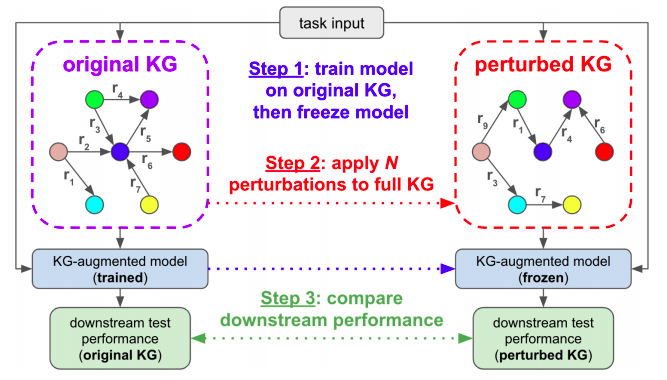

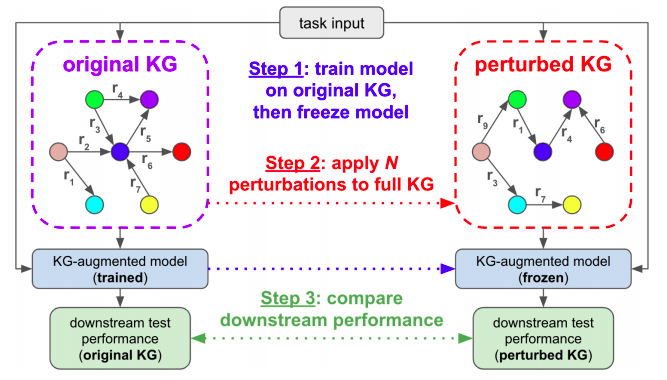

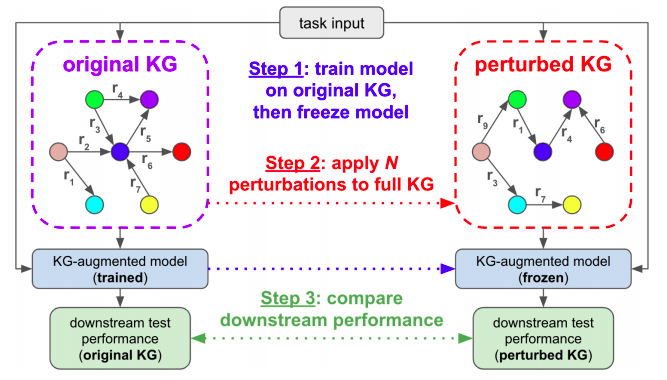

Mrigank Raman, Aaron Chan, Siddhant Agarwal, Peifeng Wang, Hansen Wang, Sungchul Kim, Ryan Rossi, Handong Zhao, Nedim Lipka, Xiang Ren. Learning to Deceive Knowledge Graph Augmented Models via Targeted Perturbation. Ninth International Conference on Learning Representations, 2021. [PDF] [Code]