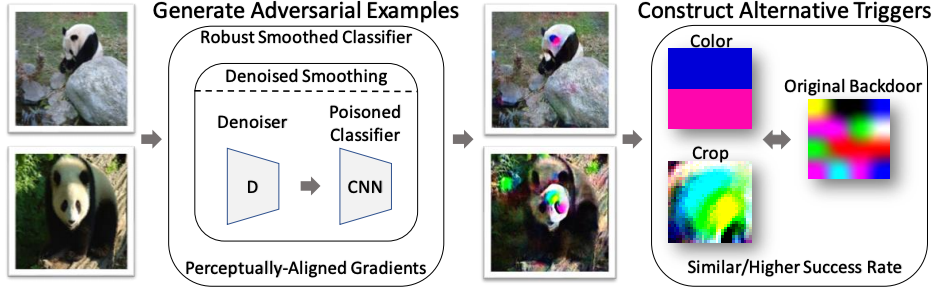

Minjie Sun, Siddhant Agarwal, Zico Kolter. Poisoned Classifiers are not only backdoored, they are fundamentally broken. Workshop on Security and Safety in Machine Learning Systems at the Ninth International Conference on Learning Representations (ICLR), 2021. [ArXiv] [Code]